On the other hand, Apache Airflow also offers multiple packages that differ in what they provide: core, pro, teams, and enterprise, with the last one fully customized to the needs of the business.

Free Apache Airflow alternatives

As per an article on the best iPaaS software, the alternative tool is a free, no-code option that integrates with multiple apps and enables automation. Another article states that its best feature lets the user watch data move through the workflow steps in real time. Moreover, it connects to APIs and supports all sorts of integrations with built-in tools, which helps when multiple integrations or triggers are involved. Beyond that, it offers various other features that make managing and automating workflows a lot easier. The two tools also differ in pricing: the alternative tool's pricing starts with a completely free package that still offers many features.

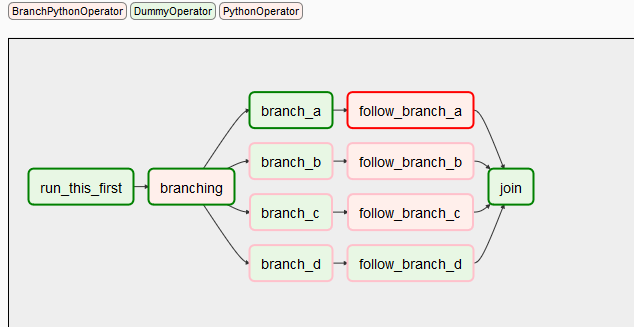

When I started interviewing other data engineers about which data pipeline solutions they started with, I assumed there would be some level of consistency in their answers. Perhaps most people would have started with SSIS, or with cron and bash scripts. However, everyone started in different places. Some started with Airflow, others with SSIS, and others with data integration systems I had never heard of.

- Joseph Machado, Senior Data Engineer at LinkedIn: "I started running Python scripts locally."
- Sarah Krasnik, Founder of Versionable: "I've actually only ever used Airflow."
- Matthew Weingarten, Senior Data Engineer at Disney Streaming: "At my first company we used TIBCO as our enterprise data pipeline tool."
- Mehdi (mehdio) Ouazza, mehdio DataTV: "Airflow was the first tool I used running an on-prem system."

It may feel like there are a lot of data pipeline solutions today, but there have always been 1,000 different ways to move data from point A to point B. These range from custom-developed infrastructure to no-code, drag-and-drop tools sold by vendors. Picking the best solution has always been a challenge.

One thing that helps in identifying the features of both apps is the reviews users give after first-hand experience. These reviews help potential users understand what each tool has to offer. Here are the reviews of Apache Airflow and the alternative tool.

Apache Airflow: As per Integrate.io, Apache Airflow is ideal for long ETL processes with multiple steps. Since it is not a library, it is better suited to large data workloads than to small ETL jobs. The best thing is that it lets you restart from any point. However, according to an article on Medium, Apache Airflow is not designed to pass data between dependent tasks without using a database. There is no good way to pass unstructured data (e.g., images, video, pickles) between dependent tasks in Airflow.
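The Medium review notes that Airflow is not designed to pass data between dependent tasks without a database. In Airflow, tasks normally exchange only small values through XComs. A common workaround for large or unstructured data is to write the data to external storage and hand the downstream task only a reference, such as a file path. The sketch below illustrates that pattern in plain Python. It is not Airflow code. `extract`, `transform`, and `run_pipeline` are hypothetical names, and a temporary directory stands in for real storage such as an object store or database.

```python
import json
import pickle
import tempfile
from pathlib import Path


# Hypothetical tasks: in a real DAG these would be Airflow tasks/operators;
# here they are plain functions so the pattern can run on its own.
def extract(storage_dir: Path) -> str:
    """Write a large, unstructured payload to storage and return only its path."""
    payload = {"frames": [bytes(1024) for _ in range(3)]}  # stand-in for image/video data
    path = storage_dir / "extract_output.pkl"
    with path.open("wb") as f:
        pickle.dump(payload, f)
    return str(path)  # the small "reference" handed downstream


def transform(reference: str) -> dict:
    """Load the payload via its reference and return a small summary."""
    with open(reference, "rb") as f:
        payload = pickle.load(f)
    return {
        "frame_count": len(payload["frames"]),
        "total_bytes": sum(len(frame) for frame in payload["frames"]),
    }


def run_pipeline() -> dict:
    # The temporary directory plays the role of shared external storage.
    with tempfile.TemporaryDirectory() as tmp:
        reference = extract(Path(tmp))
        # Only a short JSON message crosses the task boundary, never the payload.
        message = json.dumps({"path": reference})
        return transform(json.loads(message)["path"])


if __name__ == "__main__":
    print(run_pipeline())  # → {'frame_count': 3, 'total_bytes': 3072}
```

This design keeps the message between tasks small and serializable regardless of how large the payload grows. The trade-off is that both tasks must be able to reach the same storage.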
Data pipelines are a key component of any company's data infrastructure. One framework that many companies use to manage their data extracts and transforms is Airflow. Some rely entirely on Airflow and its various operators, while others use it to orchestrate other components such as Airbyte and dbt.

For those unaware, Airflow was developed back in 2014 at Airbnb to help manage the company's ever-growing need for complex data pipelines. It rapidly gained popularity outside Airbnb because it was open source and met many of the needs data engineers had. Now, nearly a decade later, many of us have started to see the cracks in its armor. We have seen its "Airflow-isms" (Sarah Krasnik). In turn, this has led to many new frameworks in the Python data pipeline space, such as Mage, Prefect, and Dagster.

Airflow has its fair share of quirks and limitations, many of which don't become obvious until a team attempts to productionize and manage Airflow in a far more demanding data culture. So in this update, I wanted to talk about why data engineers love and hate Airflow. Of course, I didn't just want to state my opinion. Instead, I interviewed several other data engineers to see what they like about Airflow and what they feel is lacking.
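The intro mentions using Airflow to orchestrate other components such as Airbyte and dbt. The reviews also praise being able to restart from any point. Both ideas come down to running tasks in dependency order as a directed acyclic graph (DAG). The following rough sketch shows that idea using Python's standard-library `graphlib`. It is not Airflow's API. The task names, `downstream_of`, and `run` are hypothetical, and `start_from` only imitates rerunning a pipeline from a chosen task.

```python
from graphlib import TopologicalSorter
from typing import Callable, Optional

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
# The names only mirror the Airbyte and dbt components mentioned in the text;
# nothing here actually calls Airbyte or dbt.
PIPELINE: dict[str, set[str]] = {
    "airbyte_sync": set(),
    "dbt_run": {"airbyte_sync"},
    "dbt_test": {"dbt_run"},
    "dbt_docs": {"dbt_run"},
    "publish_report": {"dbt_test", "dbt_docs"},
}


def downstream_of(graph: dict[str, set[str]], start: str) -> set[str]:
    """Return `start` plus every task that depends on it, directly or transitively."""
    selected = {start}
    changed = True
    while changed:
        changed = False
        for task, deps in graph.items():
            if task not in selected and deps & selected:
                selected.add(task)
                changed = True
    return selected


def run(
    graph: dict[str, set[str]],
    start_from: Optional[str] = None,
    execute: Callable[[str], None] = print,
) -> list[str]:
    """Execute tasks in dependency order and return the order used.

    If `start_from` is given, only that task and its downstream tasks run;
    upstream tasks are assumed to have succeeded in an earlier attempt.
    """
    to_run = downstream_of(graph, start_from) if start_from else set(graph)
    order = [t for t in TopologicalSorter(graph).static_order() if t in to_run]
    for task in order:
        execute(task)
    return order


if __name__ == "__main__":
    run(PIPELINE)                         # full run
    run(PIPELINE, start_from="dbt_test")  # rerun only dbt_test and publish_report
```

Restarting from a task only works if the upstream tasks persisted their outputs somewhere the rerun can read. That is why how data passes between tasks matters for this feature.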
